🧼 Pre-commit: Because “oops, forgot to format” is so last year

As a solo developer, I wear all the hats. 🎩👷♂️🎨

That includes the very boring Quality Assurance Hat™ — the one that says “yes, Amedee, you do need to check for trailing whitespace again.”

And honestly? I suck at remembering those little details. I’d rather be building cool stuff than remembering to run Black or fix a missing newline. So I let my robot friend handle it.

That friend is called pre-commit. And it’s the best personal assistant I never hired. 🤖

🧐 What is this thing?

Pre-commit is like a bouncer for your Git repo. Before your code gets into the club (your repo), it gets checked at the door:

“Whoa there — trailing whitespace? Not tonight.”

“Missing a newline at the end? Try again.”

“That YAML looks sketchy, pal.”

“You really just tried to commit a 200MB video file? What is this, Dropbox?”

“Leaking AWS keys now, are we? Security says nope.”

“Commit message says ‘fix’? That’s not a message, that’s a shrug.”

Pre-commit runs a bunch of little scripts called hooks to catch this stuff. You choose which ones to use — it’s modular, like Lego for grown-up devs. 🧱

When I commit, the hooks run. If they don’t like what they see, the commit gets bounced.

No exceptions. No drama. Just “fix it and try again.”
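To make "little scripts called hooks" concrete, here is a rough sketch of what one could look like. It is a hypothetical script (the function name is made up, and it is not the real trailing-whitespace hook), and it leans on two things pre-commit does by default: it passes the staged file names as arguments, and it treats a non-zero exit status as a failure.

```shell
#!/usr/bin/env bash
# Hypothetical hook script, for illustration only.
# pre-commit calls a hook with the staged file names as arguments
# and bounces the commit when the hook exits with a non-zero status.

check_trailing_whitespace() {
  local status=0
  local file
  for file in "$@"; do
    # -n prints each offending line with its line number,
    # -E enables the extended regex "[[:space:]]+$"
    if grep -nE '[[:space:]]+$' "$file"; then
      echo "trailing whitespace in: $file" >&2
      status=1
    fi
  done
  return "$status"
}

# The status of this last command becomes the script's exit status.
check_trailing_whitespace "$@"
```

The real hooks are smarter (many of them fix the problem instead of just complaining), but the contract is the same: files in, exit status out.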

Is it annoying? Yeah, sometimes.

But has it saved my butt from pushing broken or embarrassing code? Way too many times.

🎯 Why I bother (as a hobby dev)

I don’t have teammates yelling at me in code reviews. I am the teammate.

And future-me is very forgetful. 🧓

Pre-commit helps me:

- 📏 Keep my code consistent

- 💣 Catch dumb mistakes before I make them permanent

- 🕒 Spend less time cleaning up

- 💼 Feel a little more “pro” even when I’m hacking on toy projects

- 🧬 Use the same tool for any language. Even Bash, if you’re that kind of person.

Also, it feels kinda magical when it auto-fixes stuff and the commit just… works.

🛠 Installing it with pipx (because I’m not a barbarian)

I’m not a fan of polluting my Python environment, so I use pipx to keep things tidy. It makes CLI tools available everywhere on my machine, but keeps each one in its own isolated environment.

If you don’t have pipx yet:

python3 -m pip install --user pipx

pipx ensurepath

Then install pre-commit like a boss:

pipx install pre-commit

Boom. It’s available in every project without polluting your precious virtualenvs. Chef’s kiss. 👨🍳💋

📝 Setting it up

Inside my project (usually some weird half-finished script I’ll obsess over for 3 days and then forget for 3 months), I create a file called .pre-commit-config.yaml.

Here’s what mine usually looks like:

    repos:
      - repo: https://github.com/pre-commit/pre-commit-hooks
        rev: v5.0.0
        hooks:
          - id: trailing-whitespace
          - id: end-of-file-fixer
          - id: check-yaml
          - id: check-added-large-files
      - repo: https://github.com/gitleaks/gitleaks
        rev: v8.28.0
        hooks:
          - id: gitleaks
      - repo: https://github.com/jorisroovers/gitlint
        rev: v0.19.1
        hooks:
          - id: gitlint
      - repo: https://gitlab.com/vojko.pribudic.foss/pre-commit-update
        rev: v0.8.0
        hooks:
          - id: pre-commit-update

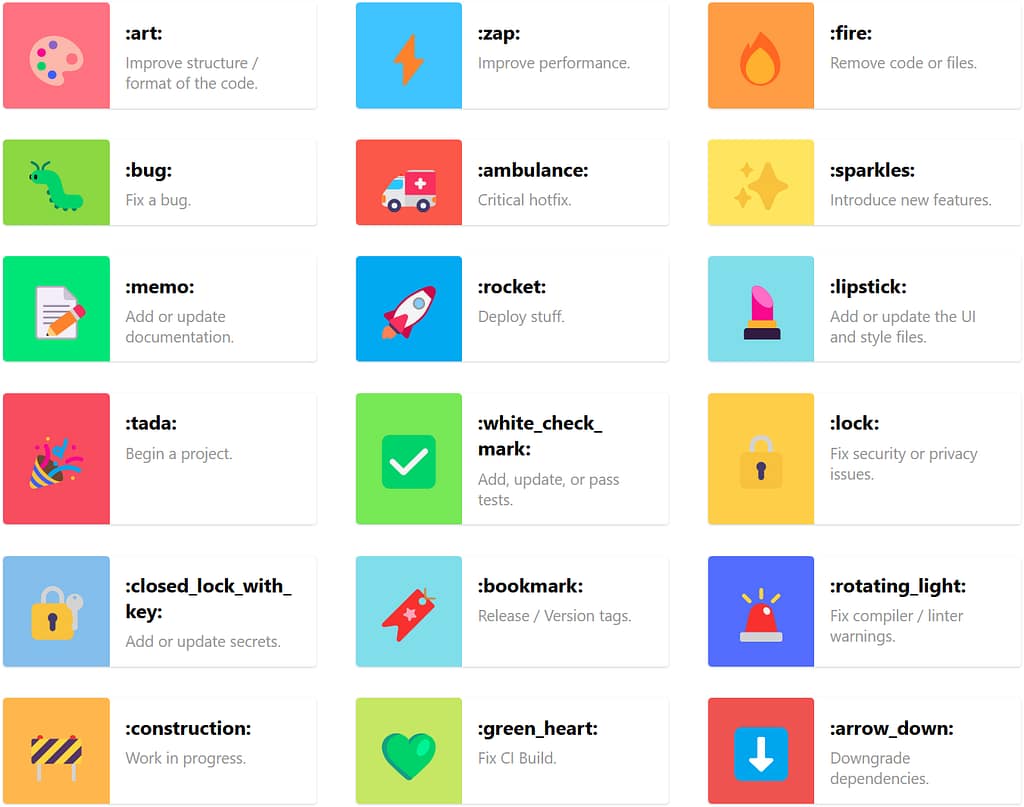

🧙♂️ What this pre-commit config actually does

You’re not just tossing some YAML in your repo and calling it a day. This thing pulls together a full-on code hygiene crew — the kind that shows up uninvited, scrubs your mess, locks up your secrets, and judges your commit messages like it’s their job. Because it is.

📦 pre-commit-hooks (v5.0.0)

These are the basics — the unglamorous chores that keep your repo from turning into a dumpster fire. Think lint roller, vacuum, and passive-aggressive IKEA manual rolled into one.

trailing-whitespace:

🚫 No more forgotten spaces at the end of lines. The silent killers of clean diffs.

end-of-file-fixer:

👨⚕️ Adds a newline at the end of each file. Why? Because some tools (and nerds) get cranky if it’s missing.

check-yaml:

🧪 Validates your YAML syntax. No more “why isn’t my config working?” only to discover you had an extra space somewhere.

check-added-large-files:

🚨 Stops you from accidentally committing that 500MB cat video or .sqlite dump. Saves your repo. Saves your dignity.
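If the default size limit doesn’t fit your project (as far as I know it kicks in at around 500 kB), the hook accepts a --maxkb argument. A minimal sketch, assuming you want a 1 MB cap; the number is just an example, pick your own:

```yaml
- repo: https://github.com/pre-commit/pre-commit-hooks
  rev: v5.0.0
  hooks:
    - id: check-added-large-files
      # Example value only: reject newly added files larger than 1024 kB
      args: ['--maxkb=1024']
```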

🔐 gitleaks (v8.28.0)

Scans your code for secrets — API keys, passwords, tokens you really shouldn’t be committing.

Because we’ve all accidentally pushed our .env file at some point. (Don’t lie.)

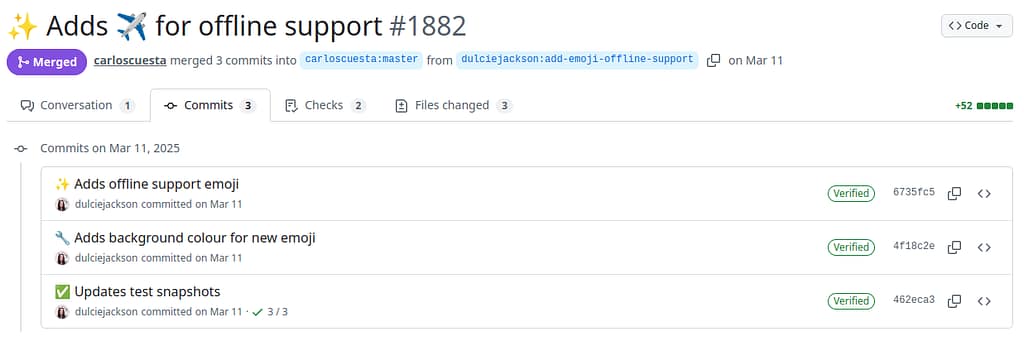

✍️ gitlint (v0.19.1)

Enforces good commit message style: out of the box that means things like a limit on subject line length, no trailing punctuation in the subject, and a body that actually exists, so messages like “asdf” get bounced.

Great if you’re trying to look like a serious dev, even when you’re mostly committing bugfixes at 2AM.

🔁 pre-commit-update (v0.8.0)

The responsible adult in the room. Automatically bumps your hook versions to the latest stable ones. No more living on ancient plugin versions.

🧼 In summary

This setup covers:

- ✅ Basic file hygiene (whitespace, newlines, YAML, large files)

- 🔒 Secret detection

- ✉️ Commit message quality

- 🆙 Keeping your hooks fresh

You can add more later, like linters specific to your language of choice. Think of this as your “minimum viable cleanliness.”

🧩 What else can it do?

There are hundreds of hooks. Some I’ve used, some I’ve just admired from afar:

- black is a Python code formatter that says: “Shhh, I know better.”

- flake8 finds bugs, smells, and style issues in Python.

- isort sorts your imports so you don’t have to.

- eslint for all you JavaScript kids.

- shellcheck for Bash scripts.

- … or write your own custom one-liner hook!

You can browse tons of them at: https://pre-commit.com/hooks.html
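About that “custom one-liner hook”: pre-commit lets you define hooks right in your own config with repo: local. Here is a minimal sketch, assuming you want to block commits that still contain the word FIXME. The hook id and name are made up for this example; language: pygrep is pre-commit’s built-in regex checker, which fails whenever the pattern in entry matches.

```yaml
repos:
  - repo: local
    hooks:
      - id: no-fixme                 # hypothetical id, name it whatever you like
        name: Block FIXME markers    # hypothetical display name
        language: pygrep             # fails when the regex in `entry` matches
        entry: 'FIXME'
        types: [text]                # only look at text files
```

No script to maintain and nothing to install, which is about as one-liner as a hook gets.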

🧙♀️ Make Git do your bidding

To hook it all into Git:

pre-commit install

Now every time you commit, your code gets a spa treatment before it enters version control. 💅
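One catch: gitlint checks the commit message, so it runs in Git’s commit-msg hook rather than the pre-commit one, and a plain pre-commit install only wires up the latter. Here is a sketch of one way to handle that, assuming a reasonably recent pre-commit that supports the default_install_hook_types key. Add this at the top level of .pre-commit-config.yaml and run pre-commit install again:

```yaml
# Top level of .pre-commit-config.yaml, next to "repos:"
# Makes a plain `pre-commit install` set up both Git hooks.
default_install_hook_types: [pre-commit, commit-msg]
```

If you’d rather not touch the config, running pre-commit install --hook-type commit-msg once per clone gets you the same result.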

Wanna retroactively clean up the whole repo? Go ahead:

pre-commit run --all-files

You’ll feel better. I promise.

🎯 TL;DR

Pre-commit is a must-have.

It’s like brushing your teeth before a date: it’s fast, polite, and avoids awkward moments later. 🪥💋

If you haven’t tried it yet: do it. Your future self (and your Git history, and your date) will thank you. 🙏

Use pipx to install it globally.

Add a .pre-commit-config.yaml.

Install the Git hook.

Enjoy cleaner commits, fewer review comments — and a commit history you’re not embarrassed to bring home to your parents. 😌💍

And if it ever annoys you too much?

You can always disable it… like cancelling the date but still showing up in their Instagram story. 😈💔

git commit --no-verify

Want help writing your first config? Or customizing it for Python, Bash, JavaScript, Kotlin, or your one-man-band side project? I’ve been there. Ask away!